In the US, lawmakers aren’t just debating how artificial intelligence should be used. They’re arguing over how fast it should move, how tightly it should be controlled, and who gets to decide the rules. If you build, sell, or operate Cloud services, this matters more than you might think.

At the center of the fight are some familiar splits: speed versus safety, federal power versus state control, and that old classic… innovation versus regulation.

And right in the middle of it all? Cloud providers. Because almost every serious AI system runs on Cloud infrastructure.

Trump wants speed. States want seatbelts.

President Donald Trump’s executive order, Ensuring a National Policy Framework for Artificial Intelligence, is blunt in its intent. The US should move fast. Really fast.

The order pushes for a single national approach to AI regulation, with as few obstacles as possible. It frames AI as a strategic asset that drives economic growth, global leadership, and competitive advantage. Safety is mentioned, but it’s not the headline act.

One of the most eye-catching elements is the creation of an AI Litigation Task Force that Trump called for in December. Its job would be to challenge state-level AI laws that are seen as slowing innovation or conflicting with federal priorities.

In other words: fewer local rules, less fragmentation, and a single rulebook, preferably a thin one.

The idea behind this is that the US should not regulate itself into second place.

The need for AI regulation

Many states aren’t convinced. While Washington talks acceleration, several states are tapping the brakes.

New York’s Responsible AI Safety and Education (RAISE) Act is a good example. It doesn’t ban AI. It doesn’t kill innovation. But it does insist on guardrails.

The Act focuses on large, powerful AI models—the kind that could cause real-world harm if things go wrong.

It introduces requirements around safety planning, incident reporting, and accountability. The reason isn’t that AI is evil. It’s that complex systems fail in complex ways.

This isn’t “move fast and break things.” Think of it as “move fast, but write things down and tell someone if it explodes.”

Other states are exploring similar ideas around transparency, oversight, and clear responsibility when things break.

Why AI regulation matters for Cloud providers

AI doesn’t float in the air. It runs on servers. In data centers. Connected by networks. Managed by platforms.

Which means regulation aimed at AI doesn’t stop with model developers. It ripples straight into the Cloud.

Here’s what’s at stake.

First: regulatory fragmentation

From a Cloud provider’s perspective, 50 different AI rulebooks is nobody’s idea of fun.

Different reporting rules.

Different definitions of “high-risk.”

Different compliance expectations, depending on geography.

That complexity costs money, slows deployments, and favors large players with big legal teams.

Trump’s push for a national framework appeals to Cloud providers for one simple reason: it reduces complexity. One standard is easier to comply with than fifty. But state-level laws aren’t going away quietly. An executive order is not legislation, and until Congress passes a federal AI law, fragmentation will remain part of the landscape.

Second: compliance is becoming a Cloud feature

Safety requirements don’t just affect AI developers. They shape how Cloud platforms are built.

Expect more demand for:

• Audit logs for AI workloads

• Clear data lineage and traceability

• Built-in monitoring for model behavior

• Tools that support regulatory reporting

This isn’t theoretical. If customers are legally required to document AI risks, they’ll expect their Cloud provider to help.

Compliance won’t be a checkbox. It’ll be a selling point.

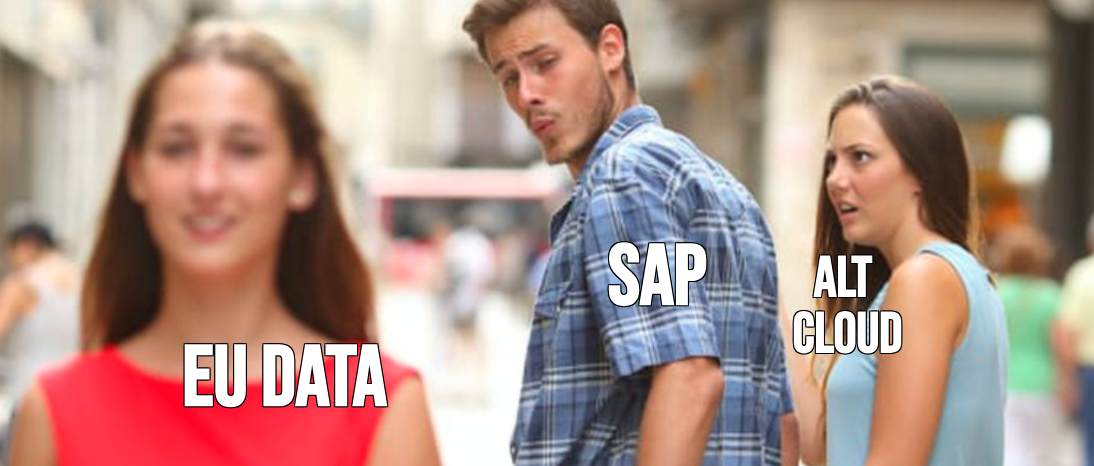

Third: data sovereignty moves from “nice to have” to strategic weapon

This is where Europe enters the chat.

While the US argues about speed versus safety, Europe has taken a different route. Clearer rules. Stronger emphasis on risk categories. Heavy focus on data protection and sovereignty.

For Cloud providers operating in Europe, this creates an opportunity.

Data residency, regional Clouds, sovereign infrastructure, and clear separation of jurisdictions aren’t just compliance tools—they’re powerful differentiators.

For global enterprises, especially in regulated sectors, the question isn’t “Where is the cheapest compute?” It’s “Where do I feel safest running this workload?”

The US federal approach prioritizes momentum: remove friction and let the market run. Meanwhile, some US states prioritize caution: build rules as the technology matures and fix problems early.

Europe, on the other hand, prioritizes structure: define risk, set expectations, and enforce them. (It’s worth noting, though, that some of the EU AI Act’s rules and timelines have recently been loosened to reduce the compliance burden.)

None of these approaches are accidental. They reflect political culture, legal tradition, and economic strategy.

For Cloud providers, this means one thing: there is no single “AI Cloud” strategy anymore.

In the US, Cloud platforms may lean into scale, speed, and rapid experimentation. Fast deployment. Massive compute. Fewer questions.

In Europe, Cloud platforms may lean into trust, transparency, and control. Clear boundaries. Strong governance. Predictable compliance.

Neither is wrong (or necessarily right). They are simply different, and customers will choose accordingly.

What smart Cloud providers are doing now

The smartest Cloud providers aren’t waiting for the dust to settle. They’re preparing for a world of complex AI regulation.

That means:

• Designing infrastructure with compliance in mind

• Offering region-specific AI and data services

• Building tooling that helps customers meet regulatory obligations

• Being explicit about where data lives and how it’s handled

It also means paying attention to politics, because in AI, policy now directly affects business performance.

Cloud providers are no longer neutral plumbing. They’re foundational players in the AI ecosystem. Those that can adapt fastest—without breaking trust—will be the ones that win.

CloudFest is an open forum where the world’s Cloud community works together to shape our future. See you there!